A Long-term Approach to Combat Deep Fakes

You recently discovered that a criminal broke into your home, stole your most precious belongings, and skipped town. However, you have in your possession a crystal clear surveillance video, and it leads directly to the apprehension of the thief. The case goes to trial and much to your surprise, your seemingly damning video is not admissible in court. In fact, the thief is set free due to lack of evidence.

Why would something like this happen?

Ultimately, the advent of deep fakes served up plausible deniability on a silver platter. If fake videos can be created easily and the technology is widely available, who’s to say the thief wasn’t framed? What if you broke into your own home, hid the valuables, superimposed the thief’s face onto yours, and then attempted to commit insurance fraud? It’s clearly unlikely, but the seed of doubt has been planted.

Unfortunately, this future is already here, and folks are worried. If a robust defense against deep fakes is not developed soon, dire consequences await.

The Problem With GANs

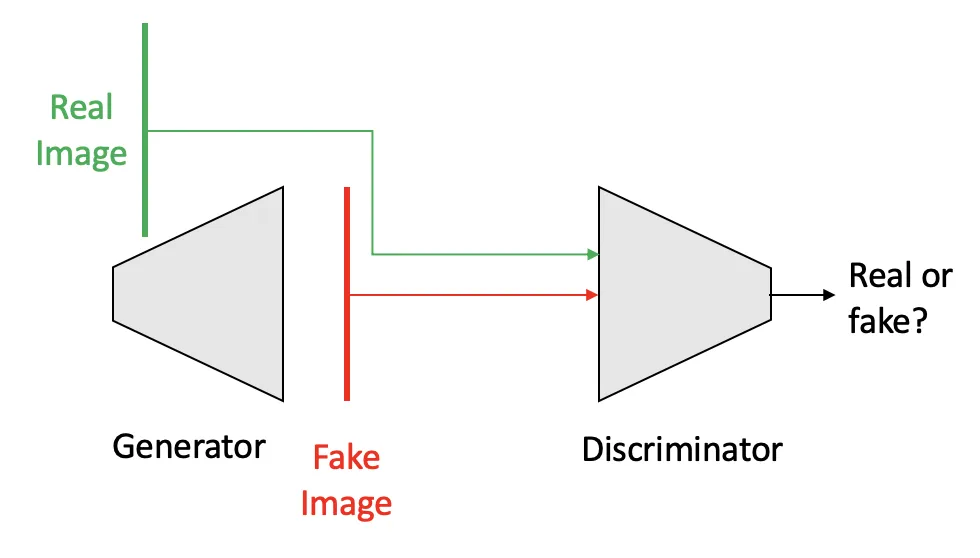

Generative Adversarial Networks (GANs), the neural network architecture that sparked the explosion of deep fakes, were introduced by Ian Goodfellow (now at Apple) in 2014. In this architecture, two neural networks compete with each other: one attempts to generate fake images, and the other attempts to distinguish (“discriminate”) between real images and the generated ones. Both networks improve at their “jobs” simultaneously, and at the end of training, the generative network (the “generator”) is used to produce the fake content.

High-level overview of the Generative Adversarial Network concept
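The competition between the two networks can be written down compactly. The expression below is the value function from the original 2014 GAN paper, included here only as a reference point: $D(x)$ is the discriminator's estimate of the probability that $x$ is real, $G(z)$ is the image the generator produces from random noise $z$, $p_{\text{data}}$ is the distribution of real images, and $p_z$ is the noise distribution.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator pushes $V$ up by scoring real images near 1 and generated images near 0, while the generator pushes $V$ down by producing images the discriminator scores as real.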

This seems great, right? Well, sort of. The defense against generated content (the discriminator) is built into the fake generation algorithm itself. Because of this, fake detectors will always be fighting an uphill battle against malicious actors. It’s not a competition to see who has the best algorithm, but rather who has the best data set and the most computational power.

This has a lot of people up in arms, as it shakes the foundation of digital content reliability to the core. There’s a lot of money and trust at stake here: Facebook is offering $10M to whoever develops the best fake detection algorithm.

The Solution: Short-Term Approach

A search for better algorithms is ongoing. Massive amounts of resources are currently being called for to develop datasets, algorithms, and computing infrastructure to combat deep fakes, especially as they relate to facets of the election process. This is wonderful and necessary. However, as compute resources become more widely available and accessible to the general user, the amount of resources required to detect these fakes will only compound. As malicious users gain access to more and more resources, further research will only contribute to an ever-escalating arms race.

A Brief Digression

Although it’s possible that a sustainable solution to the deep-fake problem might involve a clever neural network architecture, that future seems unlikely, especially given how GANs operate. Even if such a solution does exist, there is no doubt it will require a large amount of time, research, money, and compute resources. A better approach would be to turn to existing technology that can be implemented quickly.

What about public key encryption?

Public key encryption (also known as asymmetric encryption; RSA is one widely used example) works by generating two keys: one that can encrypt a message (the “public” key) and one that can decrypt a message (the “private” key). Many network streams already rely on this technology to communicate securely (you’ve probably heard of certificate authorities). If you want to establish a secure connection with a server, you need only deliver the encryption key to it. To verify that you are who you say you are, the server generates a secret message, encrypts it with the key you generated, and sends it to you (see the figure below). If you pass this “test” and decrypt the message, which is only possible if you have the secret decryption key, then a secure connection has been made. Even if someone intercepts the message, only the holder of the private key can decrypt it.

In other words, public key encryption allows for sending secret messages that are only legible by a person with the private key. If two parties have their own set of private and public keys, they can communicate with messages that are unreadable by eavesdroppers.

Process to establish one-way encrypted communication from a server to user
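The handshake described above can be sketched in a few lines of Python. This is a toy illustration only: it uses textbook RSA with tiny primes and no padding, which is not secure, and the names `encrypt`, `decrypt`, and `run_handshake` are hypothetical helpers invented for this example rather than part of any real library.

```python
import secrets

# Toy textbook RSA key pair (assumption: tiny primes, for illustration only).
p, q = 61, 53
n = p * q                              # modulus shared by both keys
e = 17                                 # public (encryption) exponent
d = pow(e, -1, (p - 1) * (q - 1))      # private (decryption) exponent

PUBLIC_KEY = (e, n)    # the user hands this to the server
PRIVATE_KEY = (d, n)   # the user keeps this secret


def encrypt(message: int, public_key: tuple[int, int]) -> int:
    """Encrypt an integer message with the public key."""
    exponent, modulus = public_key
    return pow(message, exponent, modulus)


def decrypt(ciphertext: int, private_key: tuple[int, int]) -> int:
    """Decrypt a ciphertext with the private key."""
    exponent, modulus = private_key
    return pow(ciphertext, exponent, modulus)


def run_handshake(public_key: tuple[int, int], private_key: tuple[int, int]) -> bool:
    """Server challenges the user; the user proves they hold the private key."""
    modulus = public_key[1]
    # Server side: pick a random secret and encrypt it with the user's public key.
    secret = secrets.randbelow(modulus - 2) + 2
    challenge = encrypt(secret, public_key)
    # User side: only the private key recovers the secret from the challenge.
    answer = decrypt(challenge, private_key)
    # Server side: the test passes if the answer matches the original secret.
    return answer == secret
```

Calling `run_handshake(PUBLIC_KEY, PRIVATE_KEY)` returns `True` because the private exponent undoes the public one. A production system would use a vetted cryptography library with large random primes and proper padding.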

The 10-million-dollar question: can we tweak this idea to combat deep fakes?

The Solution: Medium-Term Approach

Real video comes from a variety of device types, but a large portion comes from cell phones. Let’s use the iPhone as an example.

What if Apple generated a unique key pair for every unique device ID that it has knowledge of, and after establishing a secure connection during an update, delivered a single unique “public” key to your device? What if, furthermore, this key was hidden from you on your own device, and every time a video was recorded, it was simultaneously encrypted and stored? Now, to verify that your exact video has not been tampered with, you’d simply need to ping the Apple servers (hint: certificate authority) with your encrypted version, and they would decrypt it and make sure it matches your content. In reality, you’d only need to send a fingerprint, signature, or “hash” to save on bandwidth, but the principle remains the same. Any device manufacturer could follow suit, and as long as you trust the security of the server that’s validating content, you now get fairly reliable proof that your video has not been altered.
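A minimal sketch of that verification flow, assuming the fingerprint variant: the device encrypts a SHA-256 hash of the video with its hidden key, and the manufacturer decrypts it and compares it against the hash of the submitted video. Everything here is hypothetical and for illustration only: the function names are invented, and the key pair is textbook RSA built from two publicly known Mersenne primes, which no real system should ever use.

```python
import hashlib

# Toy key pair (assumption: publicly known Mersenne primes, so NOT secure).
# They are used only so the modulus is large enough to hold a 256-bit hash.
P = 2**127 - 1
Q = 2**521 - 1
N = P * Q
DEVICE_EXPONENT = 65537                                   # hidden on the device
SERVER_EXPONENT = pow(DEVICE_EXPONENT, -1, (P - 1) * (Q - 1))  # kept by the manufacturer


def fingerprint(video: bytes) -> int:
    """Return the SHA-256 hash of the video as an integer."""
    return int.from_bytes(hashlib.sha256(video).digest(), "big")


def device_seal(video: bytes) -> int:
    """On the device at record time: encrypt the video's fingerprint."""
    return pow(fingerprint(video), DEVICE_EXPONENT, N)


def manufacturer_verify(video: bytes, seal: int) -> bool:
    """On the manufacturer's server: decrypt the seal and compare fingerprints."""
    return pow(seal, SERVER_EXPONENT, N) == fingerprint(video)


# Usage: the seal matches the original recording but not an edited copy.
recording = b"raw video bytes from the sensor"
seal = device_seal(recording)
original_ok = manufacturer_verify(recording, seal)
edited_ok = manufacturer_verify(recording + b" with a swapped face", seal)
```

Here `original_ok` is `True` and `edited_ok` is `False`, since any change to the video changes its hash. A third party such as a video platform would only need to forward the video and its seal to the manufacturer to get the same answer.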

To beat this system, a deep fake generator would now have to generate the encrypted content along with the fake content. Without the key to perform this operation, the attacker would have to break the encryption itself, and it’s estimated that this would require a quantum computer with roughly 100 million qubits.

Now, given this infrastructure, YouTube could simply ask Apple (or any other device manufacturer/certificate authority) to verify uploaded content, and then they could put a nice green “verified” mark under it.

The devil’s advocate would ask, “what if you wanted to edit your own videos?” Two things:

- The majority of the time you wouldn’t really need to record video in “verifiable” mode. However, when the stakes are high, e.g. for a live newscast or a home surveillance video stream, it would be worth taking these extra steps.

- With the advent of cloud computing services and ever-increasing network bandwidth, it’s reasonable to expect that in the very near future you could edit video on a trusted server that verifies your raw video as its input.

The Solution: Long-Term Approach

What if someone were able to hack into your device and steal the device encryption key? This is a very real concern. At that point it would indeed be possible to generate fake content, pass it off as yours, and upload it to a content distribution service.

Here is where we add our final layer of security: physically embedding this key into the video sensor array itself, where it would not be visible to the device’s operating system. To extract the encryption key, one would have to take the device apart and render it useless, which, if the sensor is designed correctly, would destroy the key in the process. However, device manufacturers are unlikely to be able to retroactively apply this technology to devices currently out in the wild. This would be a new standard for camera manufacturing, or sensor manufacturing in general (e.g. microphones). Luckily, there are already standards in place that give us a head start.

Finally, we’ve reached the point where only the device manufacturer could validate content created by its own device. The best part: all of the technology required to make this happen exists today.

Conclusion

It’s extremely important for AI experts like us here at KUNGFU.AI to continuously strive to create models that combat malicious content creators, and these models will always serve a purpose in providing cursory validation of digitally distributed content. However, a multi-pronged approach that includes a fundamental change to content creation infrastructure may be the only way to provide strong assurance in the era of hyper-realistic misinformation.