Designing Organizations for AI-Driven Decision Making

Why Technology Isn’t the Hard Part

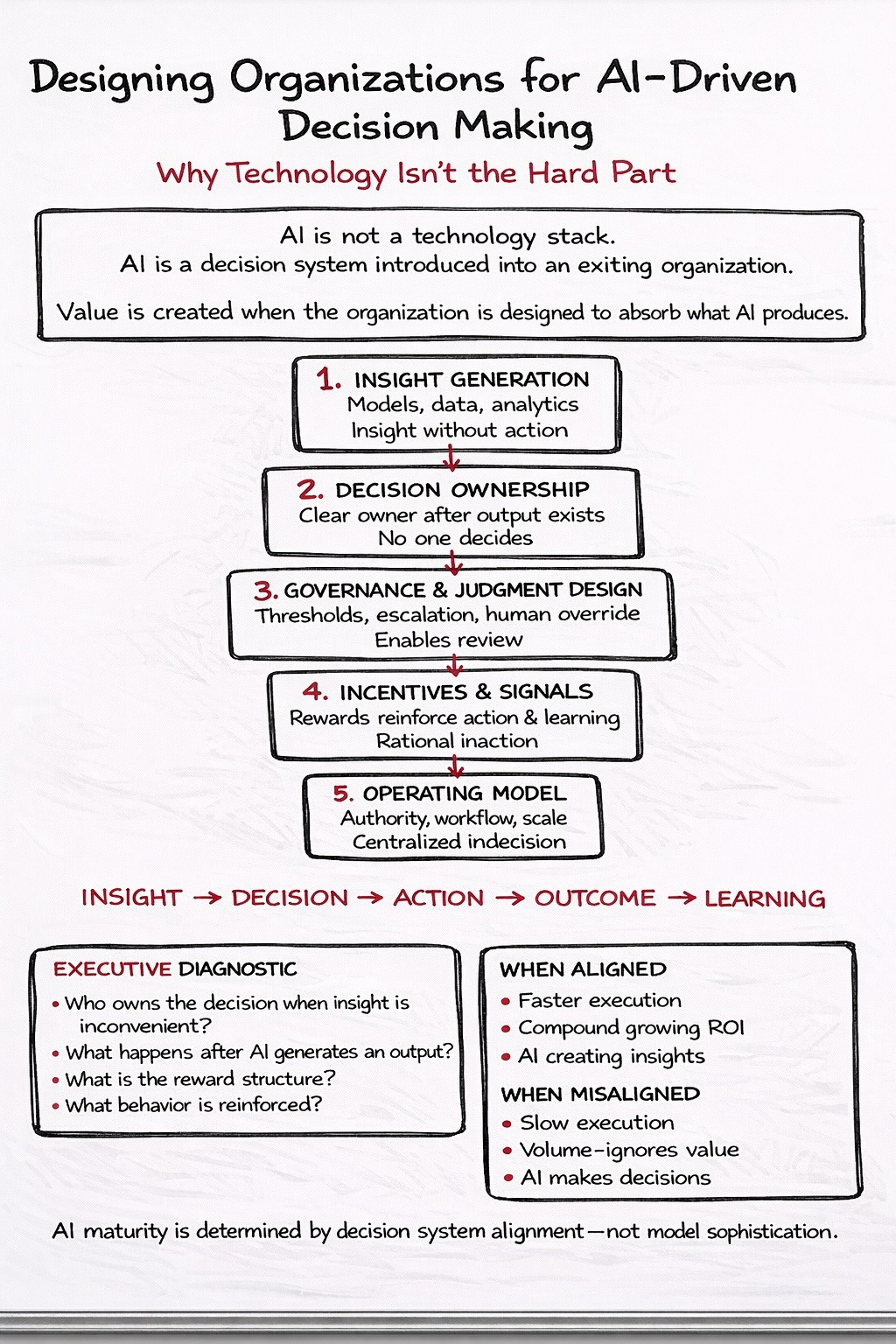

Artificial intelligence is no longer speculative. Most organizations now have access to capable models, mature platforms, and a deep ecosystem of vendors and tools. From a purely technical standpoint, the barrier to entry has collapsed. And yet outcomes vary dramatically.

Some organizations quietly compound value with AI, weaving it into everyday decision-making. Others invest heavily, run pilots, deploy models, and still struggle to see durable impact. The difference is not ambition, talent, or even the technology itself. The difference is whether the organization is designed to absorb what AI produces.

AI is not just another system to deploy. It is a decision system introduced into an organization that already has formal and informal ways decisions get made. AI does not replace those systems. It exposes them.

AI Changes How Insight Is Created, Not Where Decisions Live

AI fundamentally alters how insight is generated. Signals arrive faster. Patterns surface earlier. Probability replaces certainty. Many organizations stop there. They introduce AI into workflows without changing who owns decisions, how authority flows, how uncertainty is handled, or what behavior is rewarded. Insight moves faster, but decisions move at the same speed they always have.

This is not a technology gap. It is an organizational design gap. High-performing organizations recognize this early and treat AI not as a tooling upgrade, but as a forcing function to rethink how decisions are made under uncertainty.

Decision Ownership Begins After the Model Runs

Most organizations can name the owner of an AI initiative. Far fewer can name the owner of the decision that follows the output. Ownership is typically defined in delivery terms: who built the model, who managed the vendor, who stayed on schedule and budget. That is project accountability, not decision accountability.

Real ownership begins after the model produces an answer. It begins when the insight is uncomfortable, when it contradicts intuition, and when acting on it creates operational or political friction. If no one is accountable at that moment, the system becomes informational rather than operational.

Mature organizations are explicit about this. They name the decision, they name the owner, and they define what happens when the model is wrong and when it is right but inconvenient. AI does not remove leadership judgment. It concentrates it. If that concentration is not intentional, it diffuses back into indecision.

Governance Is What Allows AI to Move Fast

AI governance is often framed as a constraint, but in practice, good governance is what preserves speed under uncertainty. Without it, every AI-driven insight triggers the same late-stage questions: Do we trust this output? Who needs to sign off? What happens if it goes wrong?

Those questions do not disappear when governance is absent. They simply surface late, emotionally, and repeatedly, precisely when teams should be acting. High-performing organizations move these decisions upstream. They are explicit about decision thresholds, escalation paths, acceptable risk, and the confidence levels that require action. That clarity removes friction at execution time. Governance does not slow AI down. It eliminates ambiguity before it becomes a bottleneck.
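One way to make "moving these decisions upstream" concrete is to write the thresholds and escalation path down as data before any output arrives. The sketch below is illustrative only: the class names, the role names, and the 0.85 and 0.60 thresholds are assumptions made for the example, not values from any particular organization or tool.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ACT = "act"            # proceed without further sign-off
    ESCALATE = "escalate"  # send to the named escalation owner
    HOLD = "hold"          # confidence too low to act on


@dataclass(frozen=True)
class DecisionPolicy:
    """Hypothetical record of what was agreed before the model ran."""
    decision: str              # the decision being governed
    owner: str                 # who is accountable for the decision
    escalation_owner: str      # who receives escalations
    act_threshold: float       # confidence at or above this requires action
    escalate_threshold: float  # confidence at or above this is escalated

    def __post_init__(self):
        # Reject policies whose thresholds are out of order or out of range.
        if not 0.0 <= self.escalate_threshold <= self.act_threshold <= 1.0:
            raise ValueError("thresholds must satisfy 0 <= escalate <= act <= 1")

    def route(self, confidence: float) -> tuple[Action, str]:
        """Return the agreed action and the person responsible for it."""
        if confidence >= self.act_threshold:
            return Action.ACT, self.owner
        if confidence >= self.escalate_threshold:
            return Action.ESCALATE, self.escalation_owner
        return Action.HOLD, self.owner


# Example values are assumptions chosen for illustration.
policy = DecisionPolicy(
    decision="reorder inventory",
    owner="regional supply lead",
    escalation_owner="head of operations",
    act_threshold=0.85,
    escalate_threshold=0.60,
)

action, responsible = policy.route(0.72)
print(action.value, "->", responsible)
```

The point of the design is that "Who needs to sign off?" is answered once, when the policy is written, rather than each time an output arrives.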

Human Judgment Must Be Designed, Not Assumed

“Human in the loop” is one of the most common phrases in AI and one of the least understood. In many organizations, it becomes shorthand for caution. Outputs are reviewed, exceptions are escalated, and decisions are deferred, not because people lack judgment, but because judgment was never intentionally designed into the system.

In mature organizations, human involvement is not about approval. It is about judgment at specific points of uncertainty. Well-designed systems are explicit about when humans intervene, what signals trigger that intervention, which risks are acceptable without review, and who owns the consequence of an override. AI does not need constant supervision. It needs intentional handoffs between automation and judgment. That handoff is a leadership and systems design problem, not a tooling problem.
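The handoff described above can be sketched as an explicit rule: which signals trigger human review, how much risk is accepted without it, and who owns an override. Every field name, signal, and limit below is a hypothetical chosen for illustration; a real system would define its own.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOutput:
    recommendation: str
    confidence: float    # model's stated confidence, 0.0 to 1.0
    exposure: float      # assumed estimate of value at risk
    out_of_range: bool   # inputs fall outside what the model was built for


@dataclass(frozen=True)
class HandoffRule:
    min_confidence: float  # below this, a human reviews
    max_exposure: float    # risk accepted without review up to this amount

    def review_reason(self, output: ModelOutput) -> Optional[str]:
        """Return why a human must step in, or None if automation proceeds."""
        if output.out_of_range:
            return "inputs outside the model's intended range"
        if output.confidence < self.min_confidence:
            return "confidence below threshold"
        if output.exposure > self.max_exposure:
            return "exposure above the accepted limit"
        return None


@dataclass(frozen=True)
class Override:
    """Records who owns the consequence when a human overrules the model."""
    output: ModelOutput
    decided: str    # what the human chose instead
    owner: str      # the person accountable for the override
    rationale: str


# Illustrative limits only.
rule = HandoffRule(min_confidence=0.80, max_exposure=50_000.0)

routine = ModelOutput("approve", confidence=0.93, exposure=12_000.0, out_of_range=False)
large = ModelOutput("approve", confidence=0.93, exposure=90_000.0, out_of_range=False)

print(rule.review_reason(routine))  # no review needed
print(rule.review_reason(large))    # routed to a human, with the reason stated
```

Returning a reason rather than a bare yes or no keeps the trigger visible to the reviewer, and the `Override` record makes ownership of the consequence explicit instead of implied.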

AI Centers of Excellence Should Design Decisions, Not Centralize Them

AI Centers of Excellence are usually created to bring order to complexity through standardization, risk management, and shared expertise. But many unintentionally become decision bottlenecks. When COEs are asked to approve use cases, arbitrate trade-offs, and own outcomes, speed suffers, local teams disengage, and decision-makers lose proximity to real-world context.

High-functioning COEs operate differently. They do not decide for the business. They design how decisions get made. They define guardrails rather than gates, standards rather than approvals, and escalation paths rather than control points. Judgment moves to the edges while risk remains visible at the center. AI does not need a centralized brain. It needs a distributed nervous system with clear reflexes, so that teams can respond and adapt locally.

Incentives Determine Whether AI Becomes Operational

Every leadership team says they want better decisions. Very few align incentives to reinforce that behavior. Most organizations still reward minimizing visible risk, preserving alignment, protecting near-term metrics, and maintaining familiar narratives. AI introduces probability, trade-offs, and early signals, often before people feel ready.

People respond rationally to the incentives around them. If acting on AI insight creates personal risk and ignoring it creates none, the outcome is predictable. High-performing organizations are deliberate. They reward action under uncertainty, value outcomes over optics, and protect learning velocity from delivery pressure. You do not get AI-driven organizations by installing models. You get them by planning deliberately, staying patient, and raising the bar for how decisions are made.

AI Strategy Works When the Operating Model Evolves

AI strategy does not live in a slide deck. It lives in how decisions move through the organization. Many organizations change where insight is created without changing where authority sits, which results in thoughtful work that struggles to translate into action.

High-performing organizations treat AI as a catalyst for operating model evolution. They align decision rights, governance, incentives, and workflows around probabilistic insight. This is not about reorganizations or new titles. It is about coherence. When the system is aligned, AI feels intuitive. When it is not, AI feels heavy.

The Synthesis: AI Reveals the Organization You Already Have

AI is not just a technology stack. It is a decision system introduced into an existing organization. Every organization already has a decision system, formal and informal, explicit and implicit. AI does not replace that system. It reveals it.

It surfaces unclear accountability, misaligned incentives, governance gaps, and avoidance of judgment. That is why similar technologies produce vastly different outcomes across organizations. The difference is not sophistication. It is alignment.

The leaders who succeed with AI will not simply adopt new tools. They will design organizations that can act on what AI reveals, deliberately, visibly, and systemically. That is where durable advantage is built.

Frequently Asked Questions About AI-Driven Decision Making

What is AI-driven decision making?

AI-driven decision making is the use of machine learning models and probabilistic systems to inform or trigger business decisions. It does not replace leadership judgment. It changes how insight is created, which raises both the speed at which decisions must be made and the uncertainty under which they are made.

Why do most AI initiatives fail to create durable business impact?

Most AI initiatives fail because organizations change how insight is generated without changing how decisions are owned, governed, and acted upon. The failure is usually organizational design, not technology capability.

What is the role of governance in enterprise AI?

AI governance defines decision thresholds, escalation paths, acceptable risk, and confidence levels required for action. Effective governance increases execution speed by resolving trust and accountability questions before insights reach decision-makers.

What does “human in the loop” actually mean in practice?

Human in the loop means intentionally designing when humans intervene in automated systems, what triggers that intervention, and who owns the outcome of decisions. It is a system design choice, not a generic approval process.

Do AI Centers of Excellence slow down decision-making?

AI Centers of Excellence slow down decision-making when they centralize approvals and take ownership of outcomes. They accelerate decision-making when they design decision frameworks, guardrails, and escalation paths that allow judgment to remain distributed across the organization.