Don’t Poison Your Own Well with GenAI, Use it to Dig Deeper

A beginner’s guide to avoiding today’s AI pitfalls

As someone who hates clickbait (and especially AI’s growing role in it), let me start with the conclusion. Modern AI tools are, without a doubt, a force multiplier for experts in a field applying them with discretion. Modern AI tools are also, without a doubt, deeply lacking in quality without appropriate guidance. Today’s hype overvalues AI and undervalues humans. If you want enterprise-worthy AI outcomes, don’t automate your experts; augment them!

AI automation: integrating an AI solution so that a task can be completed with zero human review or intervention.

AI augmentation: integrating an AI solution so that a task can be completed with less human review or intervention.

Develop with Empathy

If you listen carefully, most people pushing for GenAI automation in any given field or task are not experts in that field. That’s because these systems are great at creating things that seem good at a glance but lack a coherent perspective and opinionated nuance. There’s a great Substack post from John Gallagher that dives deeper into why this is. In this piece we’re instead going to examine how to get the best possible results with the least possible risk.

I was recently advising a company that was exploring how they might employ AI internally. I had the privilege of observing a back-and-forth between a designer and a developer – paraphrased and truncated below.

Designer: “The coding part of our pipeline takes such a long time. Lots of steps in our process are quite straightforward, can’t we hand it to AI?”

Developer: “Actually our development process is quite a bit more unique than it might seem. I’ve tried some of these tools but they just don’t get it right. I was actually thinking they’d be much better with scaling up some of our design tasks. Those seem pretty repetitive and time-consuming.”

Designer: “It just doesn’t feel right. I can tell the difference when I’ve tried handing some of my work to an LLM.”

Developer & Designer: “Oh, I guess each of us swims in deeper waters than the other can appreciate without that expertise.”

This happy ending is attributable to the fact that both individuals here are smart, trusting, and empathetic people. Unfortunately, those are not universal qualities. There have been a handful of recent headline cases detailing layoffs attributed to AI followed by an awkward walkback when quality suddenly dipped, with an optional intermediate step of massive reputational damage to the company and/or harm to its customers.

If you have ever learned that the answer to “How hard could it be?” is often “Actually, quite hard,” apply the same line of thinking here. If you haven’t experienced that rude awakening, consider that the vast majority of people think they are above-average drivers. It turns out everyone around us lives a rich inner life built on a lifetime of learning and experience. All this is to say that we may not be the best judges of quality outside of our respective lanes, and numbers on a spreadsheet may not encompass the totality of human potential.

P vs. NP

I’m not going to go into the details of the P vs. NP problem, but if you want to impress people at dinner parties (at least the ones I’m invited to) know that computer scientists draw a distinction between how quickly a solution to a problem can be found and how quickly a solution to a problem can be verified as correct. I’ve found this to be a very fruitful idea to apply to GenAI.

The best uses I’ve found for LLMs are cases where generating a solution takes more effort than verifying the quality of the solution. Typically, this is because verification is easy rather than because generation is especially hard, but there are exceptions. Examples include formatting text, brainstorming options, and generating < 7 lines of code at a time.

The most frustration I’ve experienced with LLMs happens when the time it takes me to verify the tool’s solution is equal to what I would’ve spent to generate a solution myself. Typically, this is because verification is hard rather than because generation is easy. Examples include pro/con lists, factual questions (when the LLM doesn’t provide references), and generating > 7 lines of code at a time. Honorable mention for at-home medical advice, which would be squarely in this category if it weren’t also utterly inadvisable.

My budding opinion is this: though experts can generate solutions more quickly, the real benefit of expertise is often the ability to verify solutions more quickly and accurately. Loosely, consider generating a solution as an avenue toward producing value and verifying a solution as an avenue toward reducing risk. By putting generative AI tools in the hands of your experts, you give yourself the best possible chance for maximum value with minimal risk.

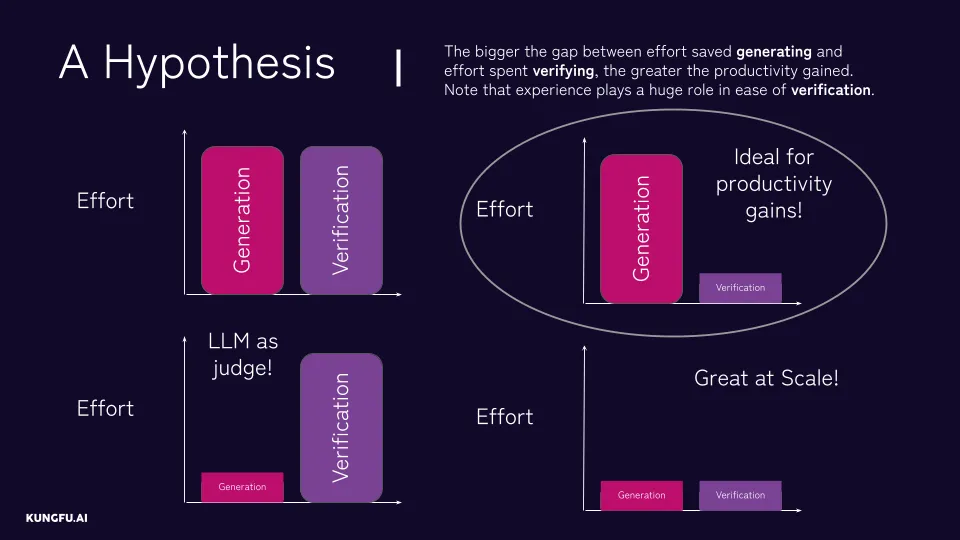

Pictured above: There are different scenarios where you might employ generative AI. In cases where answers are effortful for humans to create but simple to spot-check, generative AI can be an easy win!

This image was borrowed from an internal conversation about generative AI coding assistants, but it reinforces the idea well. The most surefire path toward realizing measurable value from generative AI is augmenting tasks where a human would need to spend considerable effort generating a solution, but can verify a solution very easily. By letting an AI generate the solution instead, you stand to save considerable time. By having an expert at the helm, you can verify that solution as quickly and accurately as possible, thereby minimizing risk at minimal cost.

Next time you consult an LLM for something, think about how much time you saved by using the tool vs. how much time you spent (or would have to spend) making sure it was correct.
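If it helps to make that comparison concrete, here is a minimal back-of-the-envelope sketch. The `TaskEstimate` fields and the minute values are hypothetical numbers made up for illustration, not measurements; plug in your own guesses.

```python
from dataclasses import dataclass


@dataclass
class TaskEstimate:
    """Rough per-task time estimates in minutes (all values are your own guesses)."""
    generate_by_hand: float   # time to produce the solution yourself
    prompt_llm: float         # time spent prompting and waiting on the tool
    verify_llm_output: float  # time to confirm the tool's answer is correct


def net_minutes_saved(task: TaskEstimate) -> float:
    """Positive means the tool saved time; zero or negative means it did not."""
    return task.generate_by_hand - (task.prompt_llm + task.verify_llm_output)


# Hypothetical examples: easy-to-verify formatting vs. hard-to-verify long code.
formatting = TaskEstimate(generate_by_hand=20, prompt_llm=2, verify_llm_output=3)
long_code = TaskEstimate(generate_by_hand=45, prompt_llm=5, verify_llm_output=45)

print(net_minutes_saved(formatting))  # 15
print(net_minutes_saved(long_code))   # -5
```

The second case is the frustrating one: verification costs as much as doing the work yourself, so the prompt time is pure overhead.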

Tie Yourself to the Mast

In order to resist the siren song of AI automation, I recommend always asking two simple questions before overcommitting:

- Have you tested it with in-the-trenches experts?

- Quantifiably, how good was it when you tested it?

You will hear plenty of success stories from those who forewent this process, but hidden in that story is the risk taken on by skipping verification. If you spend that time, you may prove that it works and you can be certain to avoid trouble before it harms your employees, your customers, or your company. If you are unable to measure, listen to your experts. Far more often than not, your employees are intelligent, hard-working people who care a lot that the job is done well. Few others are going to be better judges of quality in their areas of expertise.
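One simple way to put a number on the second question is to have experts review a sample of AI outputs and track how many they would accept as-is. The sketch below assumes a hypothetical pilot where each review is recorded as accept or reject; the `acceptance_rate` helper and the sample data are invented for illustration, and a real evaluation would likely need more samples and richer criteria.

```python
def acceptance_rate(verdicts: list[bool]) -> float:
    """Fraction of AI outputs that expert reviewers accepted without changes."""
    if not verdicts:
        raise ValueError("need at least one reviewed sample")
    return sum(verdicts) / len(verdicts)


# Hypothetical pilot: True means the expert accepted the output as-is.
pilot = [True, True, False, True, False, True, True, True, False, True]

rate = acceptance_rate(pilot)
print(f"{rate:.0%} accepted")  # 70% accepted
```

Whether a given rate is good enough depends on what the rejected cases would cost if nobody caught them.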

In my experience, code generation tools result in huge speedups for expert coders, but generate many dead-ends for beginners. I’ve heard excitement about AI therapists, doctors, teachers, coders, artists, musicians, lawyers, CEOs – you name it. But if that excitement is coming from someone who hasn’t walked a mile in those shoes, consider tightening your bowline knot* and asking questions before diving in.

* In getting draft feedback I’ve already been informed that a bowline on a bight may be more appropriate for tying oneself to a mast. Consider this a great example of exactly what I’m talking about, though the lack of knot and nautical (knotical?) knowledge is my own and not an LLM’s.