The Future of Artificial Intelligence and Mobility was Unveiled 9/12/18

A lede was buried at Apple’s new product announcement event this past week. While the headlines focused on the phones, a far more significant announcement was made about the future of artificial intelligence. Starting in late September, the supercomputer in our pockets becomes a blank canvas for artificial intelligence practitioners to create the future.

It starts with horsepower.

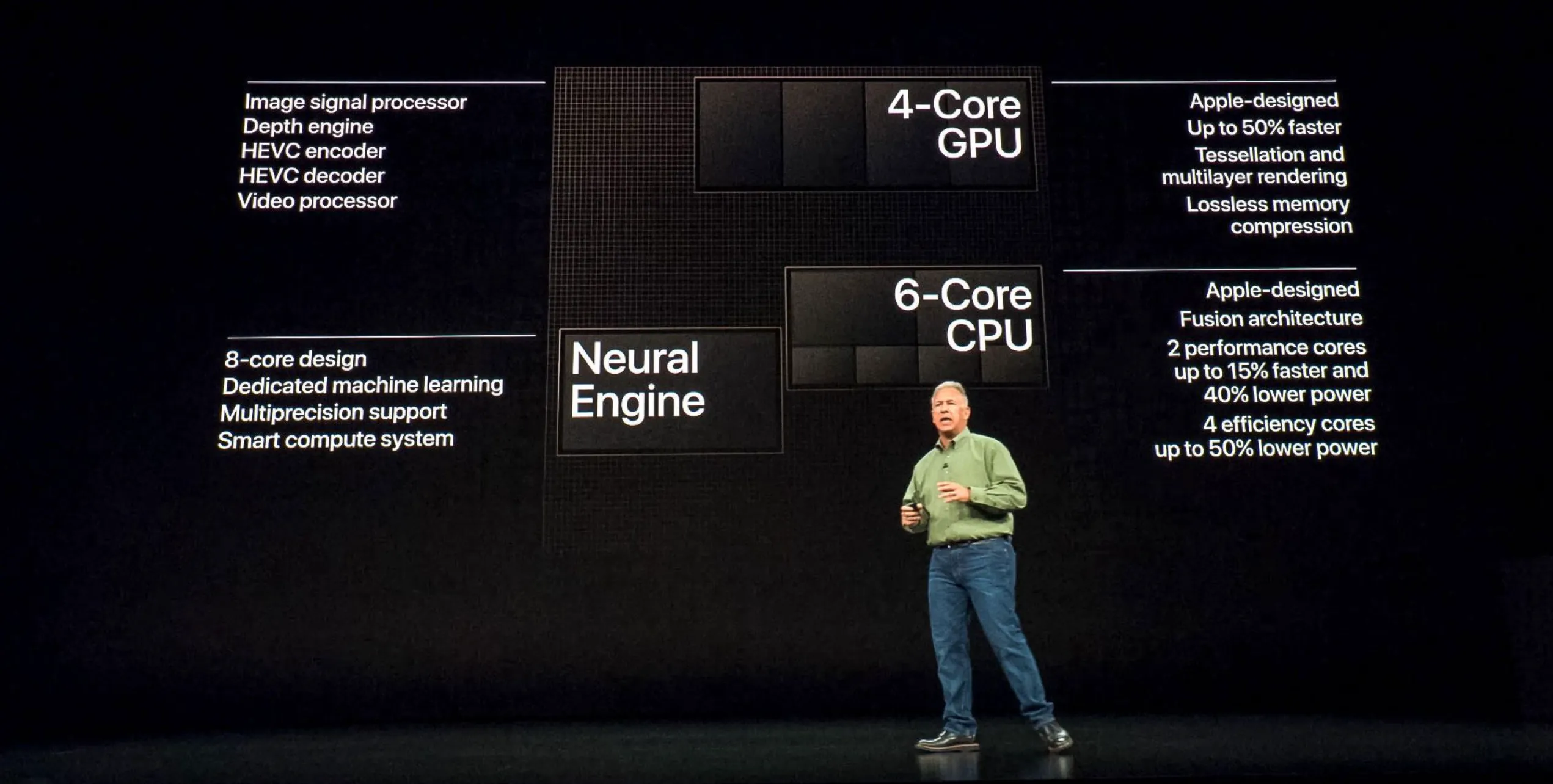

As it does every year, Apple announced a better processor for its new models, and this one has mind-boggling capabilities. The A12 Bionic, a 7-nanometer chip, includes a six-core CPU (two performance cores and four high-efficiency cores), a four-core GPU, and a neural engine, which is an eight-core dedicated machine learning processor. This neural engine can perform more than eight times as many operations per second as the previous model (five trillion operations per second, compared to 600 billion for the previous generation). That’s not all. There is a smart compute system that automatically determines whether to run algorithms on the processor, the GPU, the neural engine, or a combination of all three. Now every new iPhone has the capability to handle a variety of computationally intensive workloads, like understanding speech commands, taking better photos, and recognizing unhealthy heartbeat patterns. And this all runs on-device, with an amazing array of cameras and sensors, on a power budget that allows these new phones' batteries to last even longer than previous generations. According to Creative Strategies analyst Ben Bajarin, now “Apple has the lead when it comes to AI in mobile devices.”

A new palette for AI.

But the biggest news is that, for the first time, third-party developers will be allowed to run their own algorithms on this AI-specific hardware. In addition, Apple opened its existing Core ML platform, granting access to the iPhone’s neural engine (previously it was used exclusively to power the iPhone’s Face ID and Animoji features). Now developers can access the horsepower and tools to build AI capabilities into their apps.

Examples:

Apple says the new neural engine helps the new phones take better pictures, performing up to a trillion operations per photo. When a user presses the shutter button, the neural engine runs code that tries, among myriad other things, to quickly figure out the kind of scene being photographed and to distinguish a person from the background in order to optimize the shot.

HomeCourt, an app that uses AI to record and track basketball shots, makes, misses, and shot location, analyzes video from iOS devices to automatically track and log data for predictive and prescriptive analytics. CEO David Lee says being able to tap the new version of the neural engine has made HomeCourt much better.

Imagination required.

Apple granted the world the tools required to dream up ways to build AI smarts into their applications. Apple’s Craig Federighi also announced Create ML, a new tool for iOS development, as “a set of training wheels for building machine learning models.” The possibilities are really exciting. Let me be technical for a moment.

Create ML is integrated into playgrounds in Xcode 10, so you can view model creation workflows in real time.

With just a few lines of Swift code, you can leverage Apple’s Vision and Natural Language technologies and create models optimized for the Apple ecosystem for a variety of tasks:

- regression

- image classification

- word tagging

- sentence classification

- analyzing relationships between numerical values

You can even use your Mac to train models from Apple with your custom data.

An example:

You can train a model to recognize cats by showing it lots of images of different cats. After you’ve trained the model, you test it on data it hasn’t seen before and evaluate how well it performs the task. When the model is performing well enough, you use Core ML to integrate it into your iOS application.
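As a rough sketch of that workflow, the following playground code trains and evaluates an image classifier with Create ML. It assumes macOS with Xcode 10 or later. The folder paths and the `CatClassifier.mlmodel` file name are hypothetical placeholders, and each folder is assumed to contain one subfolder of images per label (for example `Cat` and `NotCat`).

```swift
import CreateML
import Foundation

// Hypothetical locations: replace with folders on your own Mac.
// Each folder is assumed to hold one subfolder of images per label.
let trainingDir = URL(fileURLWithPath: "/path/to/Training")
let testingDir = URL(fileURLWithPath: "/path/to/Testing")
let outputFile = URL(fileURLWithPath: "/path/to/CatClassifier.mlmodel")

// Train on the labeled training images.
let classifier = try MLImageClassifier(
    trainingData: .labeledDirectories(at: trainingDir)
)

// Evaluate on images the model has not seen before.
let evaluation = classifier.evaluation(on: .labeledDirectories(at: testingDir))
print("Classification error on test data: \(evaluation.classificationError)")

// If the error is acceptable, export the model for use with Core ML.
try classifier.write(to: outputFile)
```

What counts as "performing well enough" is a judgment call for each app; if the test error is too high, adding more varied training images is a common first step.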

The new Core ML 2 lets you integrate a broad variety of machine learning model types into your app. In addition to supporting extensive deep learning, it also supports standard models such as tree ensembles, SVMs, and generalized linear models.

It also integrates with IBM Watson Services. Core ML 2 supports:

- computer vision

- natural language processing

- decision trees
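To illustrate the computer vision case, here is a minimal sketch of running an already trained Core ML image classifier through the Vision framework. The function name `topLabel` is an illustrative assumption, not an Apple API, and the caller is assumed to supply a loaded `MLModel` (for instance, one exported from Create ML) and a `CGImage`.

```swift
import CoreGraphics
import CoreML
import Vision

/// Returns the most likely label and its confidence for `image`,
/// or nil if the model produced no classification results.
/// `topLabel` is a hypothetical helper name used for illustration.
func topLabel(for image: CGImage, using mlModel: MLModel) throws -> (label: String, confidence: Float)? {
    // Wrap the Core ML model so Vision can handle image scaling and conversion.
    let visionModel = try VNCoreMLModel(for: mlModel)
    let request = VNCoreMLRequest(model: visionModel)

    // perform(_:) runs synchronously, so results are available once it returns.
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    // Classification results are ordered from most to least confident.
    guard let observations = request.results as? [VNClassificationObservation],
          let best = observations.first else {
        return nil
    }
    return (best.identifier, best.confidence)
}
```

In an app, this call would typically run off the main thread so that inference does not block the user interface.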

Development teams, data scientists, and business leaders have the opportunity to team up and dream up ways to apply these new capabilities to their applications. The challenge now is coming up with use cases and developing strategies on how to leverage these amazing new capabilities.